Local Relation Networks for Image Recognition

code

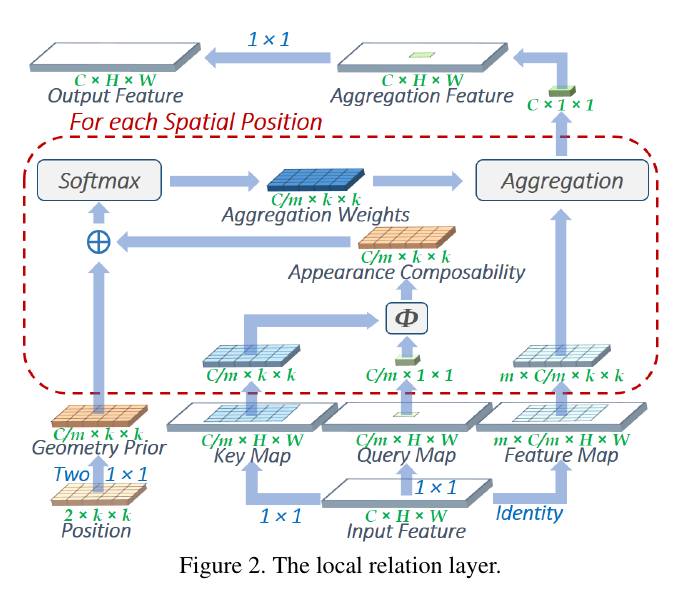

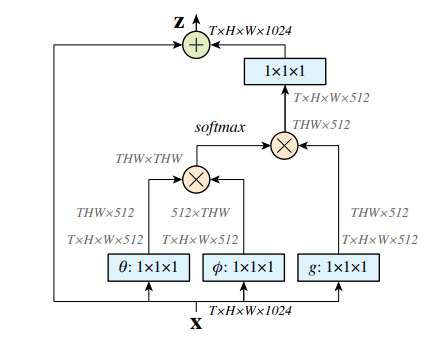

Left: LR (Local Relation). Right: Non-Local.

Geometry Prior

A learnable prior. For the $k \times k$ region centered on a given point, the geometry prior is produced by two $1 \times 1$ convolutions with a ReLU between them.
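As a rough illustration, the geometry prior can be sketched in plain Python. This sketch assumes the input to the two $1 \times 1$ convolutions is a 2-channel map of relative offsets (dy, dx) from the window center, and it uses random weights as stand-ins for learned parameters. The helper names (`relative_offsets`, `conv1x1`, `geometry_prior`) and the hidden width are hypothetical.

```python
import random

def relative_offsets(k):
    """Return a k x k grid of (dy, dx) offsets measured from the window center."""
    assert k % 2 == 1, "this sketch assumes an odd window size"
    r = k // 2
    return [[(float(dy), float(dx)) for dx in range(-r, r + 1)]
            for dy in range(-r, r + 1)]

def conv1x1(grid, weight, bias):
    """A 1x1 convolution: the same linear map applied at every grid position."""
    return [[tuple(sum(w * x for w, x in zip(row_w, cell)) + b
                   for row_w, b in zip(weight, bias))
             for cell in row]
            for row in grid]

def relu(grid):
    """Element-wise ReLU over every channel of every grid cell."""
    return [[tuple(max(0.0, v) for v in cell) for cell in row] for row in grid]

def geometry_prior(k, hidden=4, seed=0):
    """Map the offset grid to one prior value per position: conv1x1 -> ReLU -> conv1x1."""
    rng = random.Random(seed)
    # Random stand-ins for learned weights (assumption for illustration only).
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [[rng.uniform(-1, 1) for _ in range(hidden)]]
    b2 = [0.0]
    out = conv1x1(relu(conv1x1(relative_offsets(k), w1, b1)), w2, b2)
    return [[cell[0] for cell in row] for row in out]

prior = geometry_prior(7)  # a 7 x 7 grid with one prior value per window position
```

Because the prior depends only on relative position, the same $k \times k$ grid can be shared by every window in the feature map.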

Appearance Composability

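The input features pass through two $1 \times 1$ convolutions to produce the Key and the Query (Q). The Value (V) is the identity of the input, with no operation applied. Q is a scalar at the current point $(x, y)$ and is used to measure that point's correlation with its $k \times k$ region. The product of Q and Key is added to the geometry prior and passed through a softmax to obtain the Aggregation Weights. These weights are applied to the values in the $k \times k$ region, and the weighted sum is a single scalar that becomes the value at the center of the window. Finally, a $1 \times 1$ convolution produces the output features.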
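The aggregation step for a single window and a single channel can be sketched as follows. This is a minimal pure-Python illustration, not the layer's actual code: `aggregate_window` and its arguments are hypothetical names, the window is flattened into lists of length $k \times k$, and the final $1 \times 1$ convolution is left out.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a flat list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def aggregate_window(query, keys, prior, values):
    """Aggregate one k x k window.

    query  : scalar query at the window center (x, y)
    keys   : k*k scalar keys, one per position in the window
    prior  : k*k geometry-prior values
    values : k*k input values (V is the identity of the input)
    """
    # Appearance composability (query * key) plus the geometry prior.
    scores = [query * key + g for key, g in zip(keys, prior)]
    weights = softmax(scores)  # Aggregation Weights, summing to 1
    # Weighted sum of the window values gives the output at the center.
    output = sum(w * v for w, v in zip(weights, values))
    return weights, output

# Toy 3 x 3 window: equal keys and a zero prior give uniform weights,
# so the output reduces to the mean of the values.
values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
weights, output = aggregate_window(0.5, [1.0] * 9, [0.0] * 9, values)
```

Raising the prior or the key at one position shifts the softmax weight toward that position, which is how the layer adapts its aggregation to local content.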

`class LocalRelationalLayer(torch.nn.Module):`

Unfold

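Unfold extracts sliding windows from the feature map according to stride and kernel_size. For example, given an input of shape (2, 64, 19, 19), torch.nn.Unfold(k, 1, 0, 1) extracts every $k \times k$ window across all channels ($k \times k \times C$ values) and flattens it into the channel dimension, so one window corresponds to $64 \times k \times k$ values. With padding $p$ and stride $s$, there are $\left(\lfloor (H - k + 2p) / s \rfloor + 1\right) \times \left(\lfloor (W - k + 2p) / s \rfloor + 1\right)$ windows in total. With $k = 7$, the output shape is therefore [2, 3136, 169].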
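The shape arithmetic can be checked with a small helper. This is a sketch in plain Python rather than a call into PyTorch: `unfold_output_shape` is a hypothetical name, dilation is assumed to be 1, and $k = 7$ is inferred from the stated output size ($3136 = 64 \times 7 \times 7$, $169 = 13 \times 13$).

```python
def unfold_output_shape(n, c, h, w, k, padding=0, stride=1):
    """Shape of the unfolded tensor for an (n, c, h, w) input, assuming dilation = 1."""
    out_h = (h + 2 * padding - k) // stride + 1  # window positions along the height
    out_w = (w + 2 * padding - k) // stride + 1  # window positions along the width
    # Each window is flattened to c * k * k values; windows are indexed along the last axis.
    return (n, c * k * k, out_h * out_w)

shape = unfold_output_shape(2, 64, 19, 19, k=7)
print(shape)  # (2, 3136, 169)
```

With padding 0 and stride 1, each spatial side shrinks from 19 to 13, which gives the 169 windows.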